
The latest U.S. News and World Report law school rankings stumbled into our laps earlier this month. We say “stumbled” because the release was marked by multiple last-minute edits to the methodology. Nothing instills confidence like changing the whole formula three times in the final weeks!

But putting aside public flops like the failed diversity rankings or the deep methodological concerns that drive our own Top 50 Law Schools ranking, there’s actually a lot about the U.S. News rankings that is wrong even if you accept their own methodological choices. Professor Corey Rayburn Yung of the University of Kansas School of Law decided to break down the latest USNWR rankings without fundamentally altering their assumptions. In other words, to interrogate the rankings on their own terms.


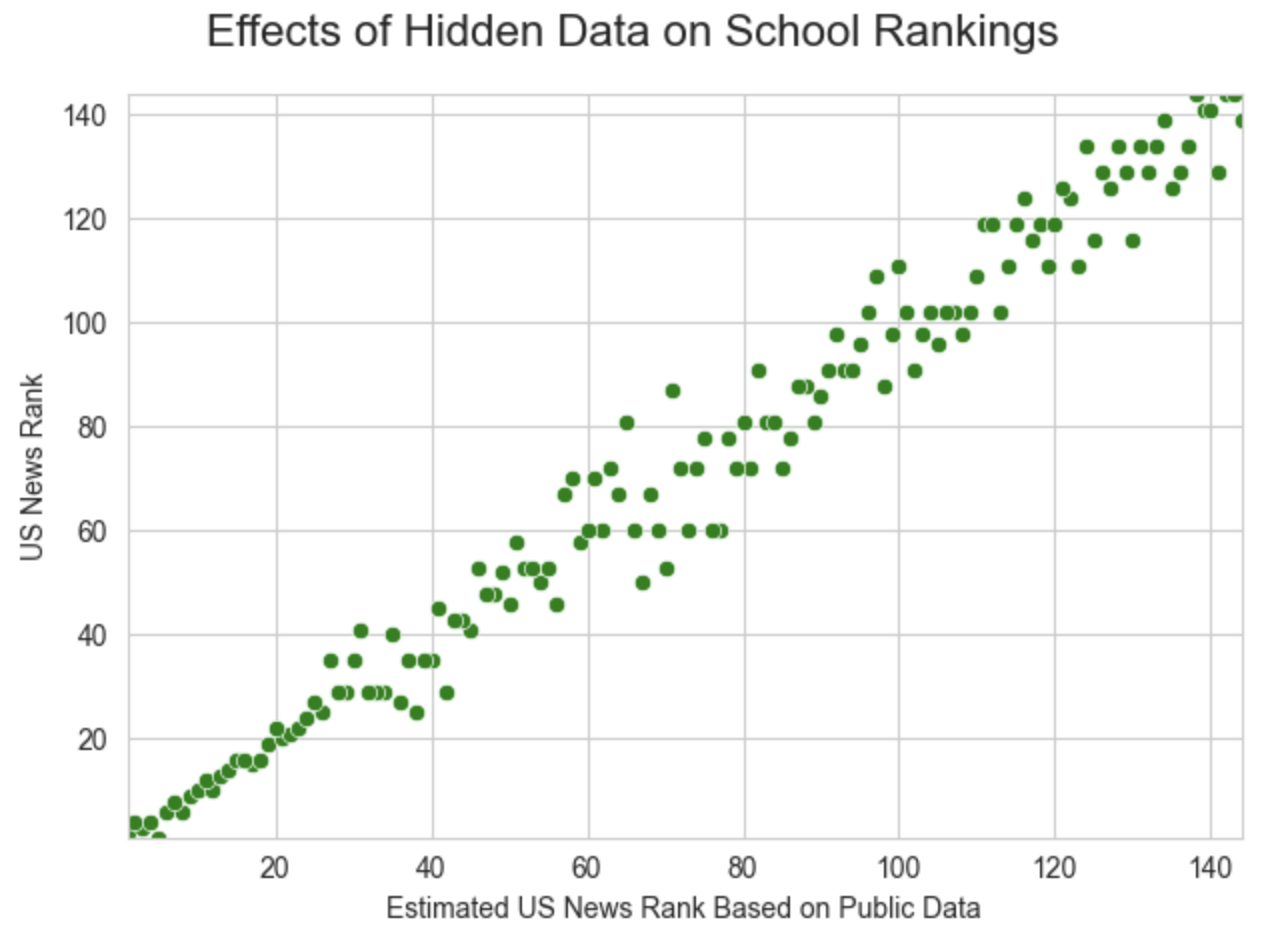

The first caveat to this endeavor is that almost 12 percent of the rankings rely on non-public data. Some of these data points can be reverse engineered from data publicly released by the law schools themselves, but the fact that USNWR isn’t divulging a significant component driving its rankings is one hell of a credibility problem, and one confirmed by plugging in the public data. Check this out:

Basically, somewhere around the 30th-ranked school, things start to get awfully janky. Not coincidentally, that’s roughly the drop-off point where schools start to get desperate about their ranking position and, consequently, more prone to futzing with their responses.

But even taking only the data that exists, there are pronounced issues with USNWR’s results. For example, here’s something you may not know about the process:


US News forces its underlying data into a normal distribution even if the actual data exhibits a different distribution.

That just seems unnecessary. It turns out that the impact of this isn’t all that bad. Ultimately it only moved schools a couple of places at worst because the effects tended to balance each other out, but why even introduce this problem? Professor Rayburn Yung worked around the issue by scaling each category using percentages instead of standardizing them.
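To make the difference concrete, here is a minimal sketch comparing the two approaches on one category. It assumes that "standardizing" means z-scores and that "scaling using percentages" means expressing each value as a percentage of the category's best value; neither USNWR's exact transform nor Professor Rayburn Yung's exact scaling is spelled out here, and the employment figures are made up for illustration.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Standardize: distance from the category mean, in standard deviations."""
    mu = mean(values)
    sigma = pstdev(values)
    return [(v - mu) / sigma for v in values]


def percent_of_max(values):
    """Scale each value as a percentage of the best value in the category."""
    top = max(values)
    return [v / top * 100 for v in values]


# Hypothetical, skewed category: most schools cluster high, one lags badly.
employment_rates = [0.97, 0.96, 0.95, 0.94, 0.60]

standardized = z_scores(employment_rates)
scaled = percent_of_max(employment_rates)

for raw, z, pct in zip(employment_rates, standardized, scaled):
    print(f"raw={raw:.2f}  z={z:+.2f}  pct_of_max={pct:.1f}")
```

Both transforms keep the schools in the same order within a category. What changes is how far apart they sit, which matters once categories are weighted and summed into a single score.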

Have you ever considered how USNWR considers bar exam passage rates? Because it’s not how law schools themselves do it!

So it’s measured using the jurisdiction where a plurality of a school’s graduates chose to take the test. That means a law school whose top students head to New York, and happen to form a plurality that year, would post a better bar passage stat than if, say, the bulk of its students went to California and struggled with that notoriously rough cut score. And regional schools are locked in zero-sum battles where there’s no hope of improving passage rates without other schools collapsing. Just using the well-known 10-month employment stats for J.D.-required jobs provides a better proxy for the recalculation.
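A rough sketch of why the plurality rule matters, using invented numbers for a single hypothetical school. It only contrasts the pass rate in the plurality jurisdiction with the pass rate across all takers; it is not the full USNWR bar passage formula, and the function names are illustrative.

```python
from collections import Counter

# Hypothetical graduates as (jurisdiction, passed) pairs.
takers = (
    [("NY", True)] * 40 + [("NY", False)] * 5
    + [("CA", True)] * 28 + [("CA", False)] * 12
    + [("NJ", True)] * 12 + [("NJ", False)] * 3
)


def plurality_jurisdiction(records):
    """Return the jurisdiction where the most graduates sat for the exam."""
    counts = Counter(jurisdiction for jurisdiction, _ in records)
    return counts.most_common(1)[0][0]


def pass_rate(records, jurisdiction=None):
    """Pass rate for one jurisdiction, or for all takers when none is given."""
    subset = [
        passed for j, passed in records
        if jurisdiction is None or j == jurisdiction
    ]
    return sum(subset) / len(subset)


plurality = plurality_jurisdiction(takers)
plurality_rate = pass_rate(takers, plurality)
overall_rate = pass_rate(takers)

print(f"plurality jurisdiction: {plurality}")
print(f"pass rate there: {plurality_rate:.1%}")
print(f"pass rate across all takers: {overall_rate:.1%}")
```

In this made-up case the school looks better under the plurality rule than across all of its takers. Shift a handful of graduates from New York to California and the plurality flips, taking the headline number with it.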

Other ridiculous stats, like the acceptance figures that schools game by “encouraging fee-waived applications from students that will not attend or be accepted” and employment at graduation, which cannot address contingent offers, all got cut to leave just the core data.

Which he used to create a new ordinal ranking even though ordinals are weak for communicating quality.

If a school dropped from 14th to 15th, social media and legal publications would treat it as the most significant news story of the day (or month).

This is certainly something we couldn’t avoid discussing at Above the Law, though we did discuss why we thought the “T14” metric was a bad way of gauging the relative merits of UCLA and Georgetown… and indeed the schools within two or three places of them in either direction.

So what did the new calculation based on USNWR’s core metrics but substituting better data reveal? Massive movement, with a number of mostly regional public schools (places like New Mexico, Hawaii, and West Virginia) taking big leaps in the rankings. But there were also big declines from schools like Kentucky, Nebraska, and Oklahoma. UCLA is still in the top 14, but Yale plummets to fifth and Columbia moves into second behind Stanford.

It’s a reminder that building a ranking system is a lot of work. Our rankings developed from fundamentally rethinking the law school ranking game, but just duplicating the U.S. News model with improved data can create a wildly different ranking regime. It’s why moving one or two places within these rankings shouldn’t be given outsized significance. The 25th school is probably not much different than the 20th or the 30th. Eye schools in the same general area and then drill down with your own research.

But, fine. Go ahead and make fun of Georgetown if you want.

Evaluating the 2022 US News Law School Rankings on its Own Terms

Earlier: The 2022 U.S. News Law School Rankings Are Here

Joe Patrice is a senior editor at Above the Law and co-host of Thinking Like A Lawyer. Feel free to email any tips, questions, or comments. Follow him on Twitter if you’re interested in law, politics, and a healthy dose of college sports news. Joe also serves as a Managing Director at RPN Executive Search.
