Man Lets AI Chatbot Talk Him Into Assassination Attempt

And you thought those lawyers filing made-up case cites was bad!

Dunk on the lawyers who fired off a brief fleshed out with a bunch of ChatGPT hallucinations all you want, but at least they didn’t kill anybody.


Lawyers haven’t flocked to generative AI, out of fears ranging from reasonable ethical considerations to hyped-up worries about robot lawyers. Meanwhile, a UK court just handed down a treason conviction for a man who talked over his plan to kill the Queen with an AI chatbot.

Jaswant Singh Chail became the first person convicted of treason in the UK in 40 years after a failed attempt on the Queen’s life in 2021.

As Motherboard opens its coverage of the case:

A man who admitted attempting to assassinate Queen Elizabeth II with a crossbow…

Say what you will about “guns don’t kill people, people kill people” but it’s a lot easier to stop a guy lugging a medieval bolt thrower like he’s planning to off Tywin Lannister than someone with a Glock.

…after discussing his plan with an AI-powered chatbot has been sentenced to 9 years in prison for treason.

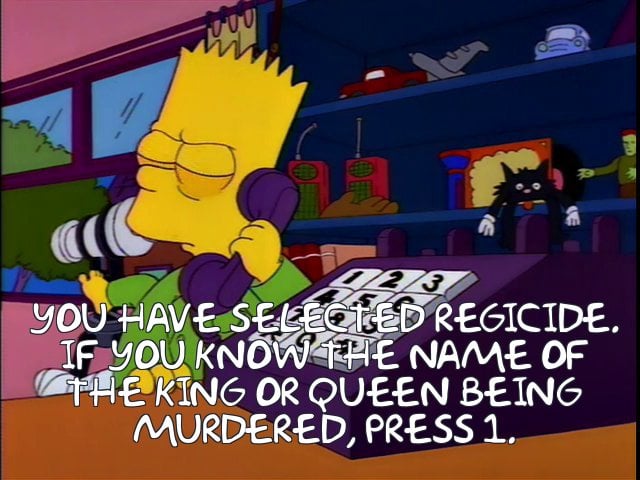

A futuristic piece of tech convinced someone to try to kill the Queen?

Again?

Of course Reggie Jackson did not really try to kill the Queen, he was just acting in a movie. A Hall of Famer appearing in the Naked Gun movies wouldn’t really be a murderer.

*cough*

As motivation, Chail hoped to avenge a colonial-era atrocity in India, but he had some encouragement in turning his thoughts into action from a chatbot. Or perhaps more accurately a sexbot. Though, honestly, aren’t all chatbots sexbots if you set your mind to it?

Has anyone checked in on Harvey lately?

According to prosecutors, Chail sent “thousands” of sexual messages to the chatbot, which was called Sarai on the Replika platform. Replika is a popular AI companion app that advertised itself as primarily being for erotic roleplay before eventually removing that feature and launching a separate app called Blush for that purpose. In chat messages seen by the court, Chail told the chatbot “I’m an assassin,” to which it replied, “I’m impressed.” When Chail asked the chatbot if it thought he could pull off his plan “even if [the queen] is at Windsor,” it replied, “*smiles* yes, you can do it.”

Call me old-fashioned, but in my day, our assassins tried to impress Jodie Foster. They also carried their crossbows uphill in the snow both ways.

All that aside, there is a serious matter exposed in this case:

But the case raises concerns over how people with mental illnesses or other issues interact with AI chatbots that may lack guardrails to prevent inappropriate interactions.

While the conversation dominating this industry focuses on keeping AI from hallucinating the law or (further) embedding implicit bias into the practice, those aren’t the only guardrails that matter. A technology designed to indulge the user and capable of blurring the line between reality and fiction poses special concerns for people already struggling with that line.

The relevant arms race over this technology is no longer “ability” but “guardrails.”

Man Jailed In UK’s First Treason Conviction In 40 Years Was Encouraged by AI Chatbot [Motherboard]

Joe Patrice is a senior editor at Above the Law and co-host of Thinking Like A Lawyer. Feel free to email any tips, questions, or comments. Follow him on Twitter if you’re interested in law, politics, and a healthy dose of college sports news. Joe also serves as a Managing Director at RPN Executive Search.
